Recently, I have been working on a book information management system. There is a table, marc, that stores book information. It has more than 20 fields.

When importing data, duplicates must be detected based on 7 of these fields, and the data volume is about 15 to 20 million rows.

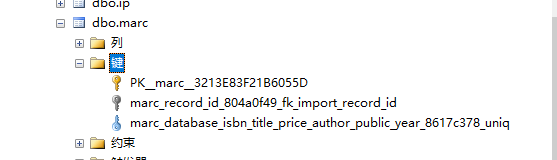

The method currently used is to create a key based on these seven fields.

The client detects duplicates by catching the errors raised when importing data.
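To make the current approach concrete, here is a minimal sketch of it. This is an illustration under assumptions, not the real import code: SQLite (via Python's `sqlite3`) stands in for SQL Server or MySQL, the column names `f1` to `f7` are placeholders for the seven real fields, and `create_table` and `import_rows` are hypothetical helper names.

```python
import sqlite3

# Placeholder names for the seven duplicate-check fields (not the real columns).
KEY_FIELDS = ["f1", "f2", "f3", "f4", "f5", "f6", "f7"]


def create_table(conn):
    """Create a simplified marc table with a unique key over the seven fields."""
    cols = ", ".join(f"{name} TEXT NOT NULL" for name in KEY_FIELDS)
    key = ", ".join(KEY_FIELDS)
    conn.execute(
        f"CREATE TABLE marc (id INTEGER PRIMARY KEY, {cols}, UNIQUE ({key}))"
    )


def import_rows(conn, rows):
    """Insert rows one by one; a unique-key violation marks a duplicate."""
    placeholders = ", ".join("?" for _ in KEY_FIELDS)
    sql = f"INSERT INTO marc ({', '.join(KEY_FIELDS)}) VALUES ({placeholders})"
    inserted = 0
    duplicates = 0
    for row in rows:
        try:
            conn.execute(sql, row)
            inserted += 1
        except sqlite3.IntegrityError:
            # The database rejected the row because the key already exists.
            duplicates += 1
    conn.commit()
    return inserted, duplicates
```

The database does all the duplicate detection here, which is why the index over the seven fields has to exist and grows with the table.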

What I want to ask is whether there is a better solution, or whether using triggers would be feasible in this situation.

Because the index becomes too large once all the data has been imported, I'd like to see if there are other ways to avoid this.

The database can be either SQL Server 2008 R2 or MySQL 5.8+.